Blocking Inappropriate Images in Search Results

Most Internet porn filters take an all-or-nothing approach to blocking websites and, rightly so, typically don’t block popular search engines: these search engines are key to the Internet’s potential. While search engines are generally well protected by features like safe search, there are also glaring misses, especially in the images a search serves up. Seemingly innocent searches can return inappropriate images. In addition, several search engines optimize performance by embedding images directly in the HTML as data (data URIs) rather than linking to them. This breaks most Internet filters, since they operate by blocking entire websites using a static list.
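To illustrate why a static list misses embedded images, here is a minimal sketch (not vRate’s code). It collects the image sources on a page and checks them against a site blacklist. The blacklist hostname and the function names are hypothetical. A linked image has a host that can be looked up. An inline data URI has no host at all, so only code running inside the page can recover its bytes for analysis.

```python
import base64
from html.parser import HTMLParser
from urllib.parse import unquote_to_bytes, urlparse

# Hypothetical static blacklist of hostnames, standing in for a traditional filter's list.
BLACKLIST = {"bad.example.com"}


class ImageSourceCollector(HTMLParser):
    """Collects the src attribute of every <img> tag in a page."""

    def __init__(self):
        super().__init__()
        self.sources = []

    def handle_starttag(self, tag, attrs):
        if tag == "img":
            src = dict(attrs).get("src")
            if src:
                self.sources.append(src)


def classify_sources(html):
    """Split <img> sources into blacklisted URLs, other URLs and decoded inline images."""
    collector = ImageSourceCollector()
    collector.feed(html)
    blocked, allowed, inline = [], [], []
    for src in collector.sources:
        if src.startswith("data:"):
            # The image bytes live inside the page itself: there is no host to look up,
            # so a site-level blacklist never gets a chance to block them.
            header, _, payload = src.partition(",")
            if header.endswith(";base64"):
                inline.append(base64.b64decode(payload))
            else:
                inline.append(unquote_to_bytes(payload))
        elif urlparse(src).hostname in BLACKLIST:
            blocked.append(src)
        else:
            allowed.append(src)
    return blocked, allowed, inline


page = ('<img src="https://bad.example.com/a.jpg">'
        '<img src="https://images.example.org/b.jpg">'
        '<img src="data:image/png;base64,iVBORw0KGgo=">')
blocked, allowed, inline = classify_sources(page)
# blocked == ['https://bad.example.com/a.jpg']; inline == [b'\x89PNG\r\n\x1a\n']
```

The inline entry is simply the PNG file signature. A real filter would pass the decoded bytes to an image classifier instead of a list lookup.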

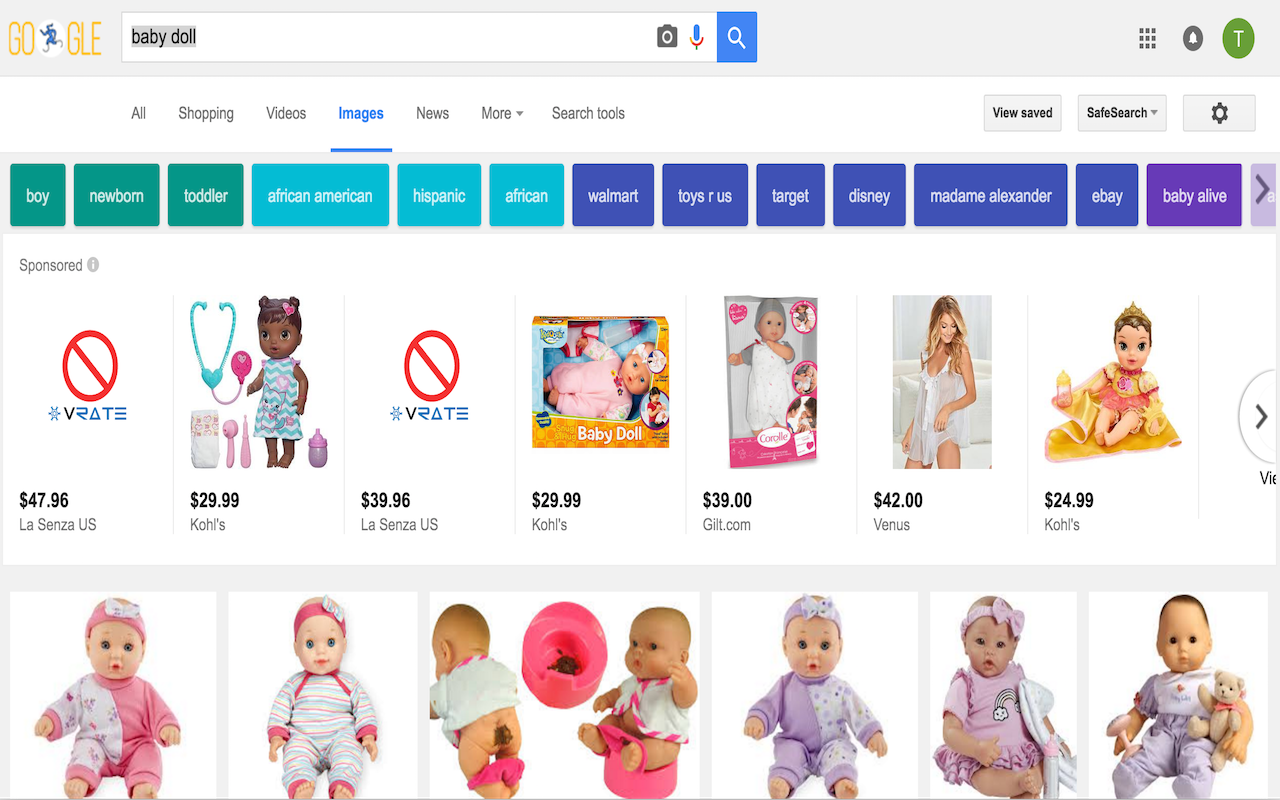

Enter the vRate Chrome extension. Because it runs within the browser, vRate can analyze content within the page, even when an image is embedded as data instead of a URL. vRate succeeds where traditional Internet filters fail by applying a combination of approaches to block inappropriate content. In addition to using static blacklists, vRate analyzes dynamic content on the page, specifically images and video thumbnails, to filter inappropriate content. Seen below is a sample search result with vRate enabled.

We searched for an “innocent” keyword. In this case vRate replaced the inappropriate images inline, because the search results were mostly benign apart from the odd one or two. However, vRate can also automatically redirect to a block page if the number of inappropriate images exceeds a threshold. This threshold can be controlled through our sensitivity settings.
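The choice between replacing images inline and redirecting to a block page can be sketched as a simple threshold rule. This is a hypothetical illustration. The sensitivity presets, score cutoffs and names below are assumptions, not vRate’s actual settings. Each image is assumed to already carry a nudity score from some classifier.

```python
from dataclasses import dataclass

# Hypothetical sensitivity presets: (per-image score cutoff, max flagged images before redirect).
SENSITIVITY = {
    "low": (0.9, 5),
    "medium": (0.8, 3),
    "high": (0.6, 1),
}


@dataclass
class ImageVerdict:
    element_id: str
    nudity_score: float  # 0.0 = benign, 1.0 = explicit, as reported by some image classifier


def decide_action(verdicts, sensitivity="medium"):
    """Return ("redirect", []) if too many images are flagged,
    otherwise ("replace", ids of the images to swap out inline)."""
    cutoff, max_flagged = SENSITIVITY[sensitivity]
    flagged = [v.element_id for v in verdicts if v.nudity_score >= cutoff]
    if len(flagged) > max_flagged:
        return "redirect", []
    return "replace", flagged


results = [ImageVerdict("img1", 0.10), ImageVerdict("img2", 0.95), ImageVerdict("img3", 0.20)]
print(decide_action(results))  # ('replace', ['img2'])
```

A mostly benign results page, like the one described above, yields an inline replacement. A page where many images cross the cutoff is redirected instead. Raising the sensitivity lowers both the score cutoff and the number of flagged images tolerated.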

The Chrome extension is currently available as a free preview, so do download it and take it for a test drive. In particular, check out how search results are handled.

Deep Neural Networks

Deep neural networks (DNNs) learn features automatically. This removes much of the burden of hand-crafted feature engineering and has greatly improved the accuracy of nudity detection (a relatively complex image classification problem) in vRate.

In a recent post, Ran Bi (NYU) discusses how DNNs are prone to ‘missing the big picture’ by focusing on features they recognize from the familiar images they were trained on. His argument is based on a study by researchers from the University of Wyoming and Cornell University. They generated images that are completely unrecognizable to human eyes, yet DNNs still labeled them as familiar objects (such as cheetah, peacock, baseball, …) with 99.99% confidence. It is an interesting read, with a cautionary quote:

“This may be another evidence to prove that Deep Neural Network does not see the world in the same way as human vision. We use the whole image to identify things while DNN depends on the features.”

Extending vRate’s successful approach to nudity detection toward the broader classification and rating of media content depends on effectively combining DNNs with other classifiers at scale.

Optimized Nudity Detection

A few years back, one of the feature extraction methods implemented in vRate was a nudity detection algorithm designed to compute the probability of nudity in an image frame. The primary design goal for this method was to speed up real-time processing of queries to vRate’s Media Analysis Webservice while maintaining a high accuracy rate and minimizing false negatives.

Where accuracy is the primary concern, template matching is a robust alternative to faster approaches such as simple skin detection. To demo this algorithm, the team released vRateLite©, which implemented an intermediate method (vRate_Nudity_Detection_Lite). It used simple heuristics, computing a probability by aggregating skin-percentage values for blobs detected in the foreground of the image frame. This eliminated lengthy comparisons between the target image and a library of templates, giving favorable processing speeds.
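The blob-based heuristic can be sketched as follows. This is not vRate_Nudity_Detection_Lite itself. The sketch swaps in a well-known explicit RGB skin rule and uses 4-connected blob labelling. It also makes a simplifying assumption: every sufficiently large blob is treated as foreground, since no foreground separation is attempted. The parameters `min_blob_fraction` and `saturation` are hypothetical.

```python
from collections import deque


def is_skin(r, g, b):
    """Explicit RGB skin rule commonly used for images taken in daylight."""
    return (r > 95 and g > 40 and b > 20 and
            max(r, g, b) - min(r, g, b) > 15 and
            abs(r - g) > 15 and r > g and r > b)


def skin_blobs(pixels):
    """Sizes of 4-connected skin regions; pixels is a list of rows of (r, g, b) tuples."""
    h, w = len(pixels), len(pixels[0])
    mask = [[is_skin(*pixels[y][x]) for x in range(w)] for y in range(h)]
    seen = [[False] * w for _ in range(h)]
    sizes = []
    for y in range(h):
        for x in range(w):
            if mask[y][x] and not seen[y][x]:
                size, queue = 0, deque([(y, x)])
                seen[y][x] = True
                while queue:  # breadth-first flood fill of one blob
                    cy, cx = queue.popleft()
                    size += 1
                    for ny, nx in ((cy + 1, cx), (cy - 1, cx), (cy, cx + 1), (cy, cx - 1)):
                        if 0 <= ny < h and 0 <= nx < w and mask[ny][nx] and not seen[ny][nx]:
                            seen[ny][nx] = True
                            queue.append((ny, nx))
                sizes.append(size)
    return sizes


def nudity_probability(pixels, min_blob_fraction=0.02, saturation=0.5):
    """Aggregate the skin percentage of sizeable blobs into a score in [0, 1]."""
    total = len(pixels) * len(pixels[0])
    # Ignore specks: only blobs covering at least min_blob_fraction of the frame count.
    skin = sum(s for s in skin_blobs(pixels) if s / total >= min_blob_fraction)
    # Map the aggregated skin percentage linearly onto [0, 1], capping at `saturation`.
    return min(1.0, (skin / total) / saturation)
```

For example, a 10×10 frame whose top-left 5×5 quarter is skin-toned (200, 150, 120) against a blue background produces one 25-pixel blob and a score of 0.5. Only a single pass over the pixels is needed, which is why this kind of heuristic is much faster than comparing against a template library.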

The next generation of vRate nudity detection algorithms incorporated template matching to handle frames with a high risk of skin exposure, as well as grayscale or monochromatic images.
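For illustration, here is a brute-force template-matching sketch on grayscale images using normalized cross-correlation (NCC), a standard matching score. vRate’s actual templates and matching method are not described here, so the template library and function names are hypothetical. NCC subtracts the mean and divides by the spread of both windows. Because of this, it is unaffected by uniform brightness or contrast changes, which suits grayscale and monochromatic frames where color-based skin detection has nothing to work with.

```python
import math


def ncc(patch, template):
    """Normalized cross-correlation of two equal-sized grayscale arrays (lists of rows)."""
    a = [v for row in patch for v in row]
    b = [v for row in template for v in row]
    mean_a, mean_b = sum(a) / len(a), sum(b) / len(b)
    da = [v - mean_a for v in a]
    db = [v - mean_b for v in b]
    denom = math.sqrt(sum(v * v for v in da) * sum(v * v for v in db))
    if denom == 0:
        return 0.0  # flat patch or template: correlation is undefined, treat as no match
    return sum(x * y for x, y in zip(da, db)) / denom


def best_match(image, templates):
    """Slide every template over the image; return (best_score, template_index, (row, col))."""
    best = (-1.0, None, None)
    height, width = len(image), len(image[0])
    for idx, tmpl in enumerate(templates):
        th, tw = len(tmpl), len(tmpl[0])
        for y in range(height - th + 1):
            for x in range(width - tw + 1):
                patch = [row[x:x + tw] for row in image[y:y + th]]
                score = ncc(patch, tmpl)
                if score > best[0]:
                    best = (score, idx, (y, x))
    return best


template = [[10, 20], [30, 40]]
image = [[0] * 5 for _ in range(5)]
image[2][1:3], image[3][1:3] = [10, 20], [30, 40]
print(best_match(image, [template]))  # (1.0, 0, (2, 1))
```

Note the nested loops: cost grows with image size × template size × number of templates. This is the kind of lengthy comparison that the Lite method avoided.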

vRate Smart Internet Filter Chrome Browser Extension

We are excited to announce the availability of our new and innovative Internet filter on Chrome. The browser extension leverages the same high-performance vRate API to analyze images for inappropriate content, and it filters such content live during browsing. We compared it against several competitor products during our beta testing and are pleased with the results so far.

To download, please visit https://vrate.net/#!/internetFilter

Using Multiple Classifiers to Improve Accuracy

Our earliest algorithms were quite primitive by today’s standards. To improve accuracy, we applied several classifiers to detect nudity, including an ensemble of skin-based classifiers, pattern detection, skin blob detection and feature detection.

With recent advances in deep learning, some of the past techniques have become ineffective. One could still combine the output of a deep learning model with other classifiers to build an ensemble-based classifier. However, you still have to deal with the same complexities, such as combining multiple predictions and assigning weights among them, to improve accuracy.
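The simplest form of such an ensemble is a weighted average of per-classifier probabilities. In the sketch below, the classifier names and weights are purely hypothetical placeholders, not vRate’s tuned values. Classifiers that produced no output for a frame are skipped, and the remaining weights are renormalized.

```python
from typing import Dict

# Hypothetical weights; in practice these would be tuned on labelled validation data.
WEIGHTS = {"dnn": 0.6, "skin_blob": 0.25, "template": 0.15}


def combine(scores: Dict[str, float], weights: Dict[str, float]) -> float:
    """Weighted average of per-classifier nudity probabilities in [0, 1].

    Classifiers missing from `scores` are skipped and the remaining weights are
    renormalized so the result stays in [0, 1]."""
    used = [name for name in weights if name in scores]
    total_weight = sum(weights[name] for name in used)
    if total_weight == 0:
        raise ValueError("no weighted classifier produced a score")
    return sum(weights[name] * scores[name] for name in used) / total_weight


print(combine({"dnn": 0.9, "skin_blob": 0.4, "template": 0.5}, WEIGHTS))  # about 0.715
```

Even this simple scheme raises the questions mentioned above. You have to decide how the weights are chosen, how to handle missing or disagreeing classifiers, and whether to recalibrate the individual scores before averaging.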

Within vRate, applying multiple methods, tuned over time, has helped us deliver automated nudity detection that is both effective and economical. In upcoming posts, we hope to share our technology journey, starting from the early days in 2009.